You are here:

Ultra-low-power AI/ML processor and accelerator

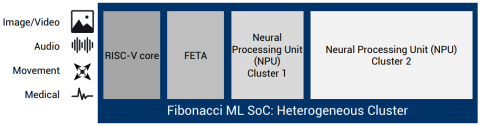

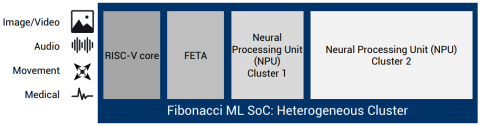

CSEM’s Fibonacci machine-learning (ML) accelerators are built on the principle of hierarchical scalability. Similar to the Fibonacci sequence, where each number is the sum of the two preceding ones, CSEM’s System on Chip (SoC) can dynamically enhance its computational power by adding accelerator resources as needed. This heterogeneous architecture includes a low-power time-series ML accelerator (FETA), two clusters of highly parallelized neural processing units (NPUs), energy-efficient on-chip memories, a versatile RISC-V microcontroller core, and a comprehensive set of peripherals for seamless system integration. Trained models can be deployed using CSEM’s ML compiler, which supports all common formats, such as ONNX.

NPU Clusters:

• Optimized for spatial neural networks (e.g. CNNs, ResNets, MobileNets)

• Sparsity exploitation

• Peak MAC performance: 160 GOPS

FETA Cluster:

• Optimized for temporal neural networks (e.g. RNNs like LSTM or GRU)

• Smart temporal feature extraction engine

NPU Clusters:

• Optimized for spatial neural networks (e.g. CNNs, ResNets, MobileNets)

• Sparsity exploitation

• Peak MAC performance: 160 GOPS

FETA Cluster:

• Optimized for temporal neural networks (e.g. RNNs like LSTM or GRU)

• Smart temporal feature extraction engine

查看 Ultra-low-power AI/ML processor and accelerator 详细介绍:

- 查看 Ultra-low-power AI/ML processor and accelerator 完整数据手册

- 联系 Ultra-low-power AI/ML processor and accelerator 供应商

Block Diagram of the Ultra-low-power AI/ML processor and accelerator

Video Demo of the Ultra-low-power AI/ML processor and accelerator

Emotion detection running from a coin cell battery

AI IP

- RT-630-FPGA Hardware Root of Trust Security Processor for Cloud/AI/ML SoC FIPS-140

- NPU IP family for generative and classic AI with highest power efficiency, scalable and future proof

- NPU IP for Embedded AI

- Tessent AI IC debug and optimization

- AI accelerator (NPU) IP - 16 to 32 TOPS

- Complete Neural Processor for Edge AI