Highly scalable inference NPU IP for next-gen AI applications

ENLIGHT Pro supports transformer models, a key requirement in modern artificial intelligence (AI) applications, particularly Large Language Models (LLMs). LLMs, which are trained on extensive datasets using deep learning techniques, are instrumental in tasks such as text recognition and generation. The automotive industry is expected to adopt LLMs to offer instant, personalized, and accurate responses to customers' inquiries.

ENLIGHT Pro sets itself apart by achieving 4096 MACs/cycle for 8-bit integer operations, quadrupling the speed of its predecessor, and operating at up to 1.0 GHz on a 14 nm process node. It offers performance ranging from 8 TOPS (Tera Operations per Second) to hundreds of TOPS, optimized for flexibility and scalability.
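The 8 TOPS figure is consistent with the MAC count and clock rate quoted above. The sketch below works through that arithmetic. It assumes the common convention that one multiply-accumulate counts as two operations, which the source does not state explicitly; the function name `peak_tops` is illustrative only.

```python
def peak_tops(macs_per_cycle: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Peak throughput in tera-operations per second.

    Assumes each MAC counts as `ops_per_mac` operations (multiply + add),
    a common convention that the source does not spell out.
    """
    return macs_per_cycle * ops_per_mac * clock_hz / 1e12


# Figures from the text: 4096 INT8 MACs/cycle at up to 1.0 GHz.
single_core = peak_tops(macs_per_cycle=4096, clock_hz=1.0e9)
print(f"Single core peak: {single_core:.3f} TOPS")
```

Under these assumptions a single core peaks at about 8.2 TOPS, which matches the lower end of the quoted range. Higher figures would come from combining multiple cores; how closely real workloads approach the peak depends on utilization, which the source does not quantify.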

ENLIGHT Pro supports tensor shape transformation operations, including slicing, splitting, and transposing. It also supports a wide variety of data types (8-, 16-, and 32-bit integer, and 16- and 32-bit floating point (FP)) to ensure flexibility across computational tasks. The vector processor achieves 64 MACs/cycle in 16-bit floating point and includes a 32x2 KB vector register file (VRF). Additionally, single-core, dual-core, and quad-core configurations are available, with scalable task mappings such as multiple models, data parallelism, and tensor parallelism.
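To illustrate the difference between the data-parallel and tensor-parallel task mappings mentioned above, here is a minimal pure-Python sketch. It is a conceptual model only, not the ENLIGHT Pro scheduler: the helper names (`matmul`, `split_rows`, `split_cols`) and the tiny matrices are hypothetical, and each "core" is simply one loop iteration.

```python
def matmul(a, b):
    """Plain matrix multiply on nested lists: (m x k) @ (k x n) -> (m x n)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def split_rows(m, parts):
    """Split a matrix into `parts` contiguous row blocks."""
    step = len(m) // parts
    return [m[i * step:(i + 1) * step] for i in range(parts)]


def split_cols(m, parts):
    """Split a matrix into `parts` contiguous column blocks."""
    step = len(m[0]) // parts
    return [[row[i * step:(i + 1) * step] for row in m] for i in range(parts)]


NUM_CORES = 4  # models a quad-core configuration

x = [[1, 2], [3, 4], [5, 6], [7, 8]]   # batch of 4 inputs, 2 features each
w = [[1, 0, 2, 1], [0, 1, 1, 2]]       # 2 x 4 weight matrix

reference = matmul(x, w)  # single-core result

# Data parallelism: each core gets a slice of the batch and the full weights.
data_parallel = []
for x_shard in split_rows(x, NUM_CORES):
    data_parallel.extend(matmul(x_shard, w))

# Tensor parallelism: each core gets the full batch and a column slice of the
# weights; the partial outputs are concatenated along the column axis.
partials = [matmul(x, w_shard) for w_shard in split_cols(w, NUM_CORES)]
tensor_parallel = [sum((p[r] for p in partials), []) for r in range(len(x))]

print(data_parallel == reference, tensor_parallel == reference)
```

Both mappings reproduce the single-core result. They differ in what is replicated: data parallelism copies the weights to every core, while tensor parallelism copies the activations and divides the weights, which tends to matter for large transformer layers.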

ENLIGHT Pro incorporates a RISC-V CPU vector extension with custom instructions, including support for Softmax and local storage access, which enhances its overall flexibility. It comes with a software toolkit that supports widely used network formats such as ONNX (PyTorch), TFLite (TensorFlow), and CFG (Darknet). The ENLIGHT SDK streamlines the conversion of floating-point networks to integer networks through a network compiler, and generates NPU commands and network parameters. Notably, ENLIGHT Pro has already secured customer adoption upon its launch.
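The source does not describe how the ENLIGHT SDK converts floating-point networks to integer networks. As a general illustration of what such a conversion involves, the sketch below applies symmetric per-tensor INT8 quantization, one widely used scheme. The functions `quantize_int8` and `dequantize` are hypothetical and are not part of the ENLIGHT SDK.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: floats -> (int8 values, scale).

    Generic technique for illustration; not the ENLIGHT SDK's actual method.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map integer values back to approximate floats."""
    return [v * scale for v in q]


weights = [0.4, -1.0, 0.25, 0.0]          # example floating-point parameters
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

# The round-trip error per value is bounded by half a quantization step.
max_error = max(abs(r - w) for r, w in zip(restored, weights))
print(q_weights, max_error <= scale / 2 + 1e-12)
```

A production compiler would typically also calibrate activation ranges on sample data and may use per-channel scales; those steps are omitted here.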

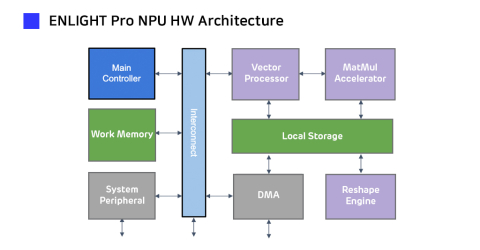

Block Diagram of the Highly scalable inference NPU IP for next-gen AI applications